Zipline in the Cloud

Thomas Wiecki

Source: http://www.mountainexposures.co.uk

About me

- PhD student at Brown University.

- Computational Cognitive Neuroscience.

- Quantitative Researcher at Quantopian.

- Optimizing trading algorithms.

Algorithmic Trading

- An algorithm makes trading decisions automatically, based on external events (price, volume, Twitter trends...)

Backtesting

Zipline http://zipline.io/

- Open-source backtester written in Python.

- Simulates real trading:

- Transaction costs

- Slippage

- Streaming of financial data:

- prevents look-ahead bias.

- portable to live-trading.

- Batteries included: moving average, alpha, beta, Sharpe ratio...

- Used in production on Quantopian.
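To make the streaming point concrete, here is a minimal sketch in plain Python. It is not Zipline's implementation: the names `mavg_strategy` and `run_backtest`, the one-share position size and the flat per-share slippage are all assumptions made for illustration. The strategy only ever receives the bars seen so far, so it cannot peek at future prices, and every fill pays slippage.

```python
def mavg_strategy(history, window=3):
    """Hold one share if the latest price is above its moving average, else none."""
    if len(history) < window:
        return 0
    mavg = sum(history[-window:]) / window
    return 1 if history[-1] > mavg else 0


def run_backtest(prices, strategy, cash=100.0, slippage=0.01):
    """Feed prices one bar at a time; the strategy never sees future bars."""
    position = 0
    history = []
    for price in prices:
        history.append(price)          # only past and current bars are visible
        target = strategy(history)
        delta = target - position
        if delta > 0:                  # buy: pay the price plus slippage
            cash -= delta * (price + slippage)
        elif delta < 0:                # sell: receive the price minus slippage
            cash -= delta * (price - slippage)
        position = target
    # Mark the remaining position to the last observed price
    return cash + position * prices[-1]


final_value = run_backtest([10.0, 10.5, 11.0, 10.0, 12.0], mavg_strategy)
```

Because the loop hands over `history` rather than the full price list, the same strategy function could in principle be driven by a live feed, which is the portability argument made above.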

Story-Time

Posted an article on a trading strategy on the Quantopian forums:

In [9]:

from IPython.display import HTML

HTML("<iframe src=https://www.quantopian.com/posts/olmar-implementation-fixed-bug width=1100 height=350></iframe>")

Out[9]:

In [1]:

%pylab inline

import sys

import logbook

zipline_logging = logbook.NestedSetup([

logbook.NullHandler(level=logbook.DEBUG, bubble=True),

logbook.StreamHandler(sys.stdout, level=logbook.INFO),

logbook.StreamHandler(sys.stderr, level=logbook.ERROR),

])

zipline_logging.push_application()

import numpy as np

import datetime

Welcome to pylab, a matplotlib-based Python environment [backend: module://IPython.kernel.zmq.pylab.backend_inline]. For more information, type 'help(pylab)'.

Here, I ported the algorithm to Zipline:

In [2]:

import zipline

STOCKS = ['AMD', 'CERN', 'COST', 'DELL', 'GPS', 'INTC', 'MMM']

class OLMAR(zipline.TradingAlgorithm):
    def initialize(self, eps=1, window_length=5):
        self.stocks = STOCKS
        self.m = len(self.stocks)
        self.price = {}
        self.b_t = np.ones(self.m) / self.m
        self.last_desired_port = np.ones(self.m) / self.m
        self.eps = eps
        self.init = True
        self.days = 0
        self.window_length = window_length
        self.add_transform(zipline.transforms.MovingAverage, 'mavg', ['price'],
                           window_length=window_length)
        self.set_slippage(zipline.finance.slippage.FixedSlippage())
        self.set_commission(zipline.finance.commission.PerShare(cost=0))

    def handle_data(self, data):
        self.days += 1
        if self.days < self.window_length:
            return

        if self.init:
            self.rebalance_portfolio(data, self.b_t)
            self.init = False
            return

        m = self.m
        x_tilde = np.zeros(m)
        b = np.zeros(m)

        # find relative moving average price for each security
        for i, stock in enumerate(self.stocks):
            price = data[stock].price
            # Relative mean deviation
            x_tilde[i] = data[stock]['mavg']['price'] / price

        ###########################
        # Inside of OLMAR (algo 2)
        x_bar = x_tilde.mean()

        # market relative deviation
        mark_rel_dev = x_tilde - x_bar

        # Expected return with current portfolio
        exp_return = np.dot(self.b_t, x_tilde)
        weight = self.eps - exp_return
        variability = (np.linalg.norm(mark_rel_dev))**2

        if variability == 0.0:
            step_size = 0  # no portfolio update
        else:
            step_size = max(0, weight/variability)

        b = self.b_t + step_size*mark_rel_dev
        b_norm = simplex_projection(b)
        #print b_norm

        self.rebalance_portfolio(data, b_norm)

        # Predicted return with new portfolio
        pred_return = np.dot(b_norm, x_tilde)

        # update portfolio
        self.b_t = b_norm

    def rebalance_portfolio(self, data, desired_port):
        # rebalance portfolio
        desired_amount = np.zeros_like(desired_port)
        current_amount = np.zeros_like(desired_port)
        prices = np.zeros_like(desired_port)

        if self.init:
            positions_value = self.portfolio.starting_cash
        else:
            positions_value = self.portfolio.positions_value + self.portfolio.cash

        for i, stock in enumerate(self.stocks):
            current_amount[i] = self.portfolio.positions[stock].amount
            prices[i] = data[stock].price

        desired_amount = np.round(desired_port * positions_value / prices)
        diff_amount = desired_amount - current_amount

        for i, stock in enumerate(self.stocks):
            self.order(stock, diff_amount[i])  # order_stock


def simplex_projection(v, b=1):
    """Projection of vectors onto the simplex domain

    Implemented according to the paper: Efficient projections onto the
    l1-ball for learning in high dimensions, John Duchi, et al. ICML 2008.
    Implementation Time: 2011 June 17 by Bin@libin AT pmail.ntu.edu.sg
    Optimization Problem: min_{w}\| w - v \|_{2}^{2}
    s.t. sum_{i=1}^{m} w_{i} = z, w_{i}\geq 0

    Input: A vector v \in R^{m}, and a scalar z > 0 (passed as b, default=1)
    Output: Projection vector w

    :Example:
    >>> proj = simplex_projection([.4 ,.3, -.4, .5])
    >>> print proj
    array([ 0.33333333, 0.23333333, 0. , 0.43333333])
    >>> print proj.sum()
    1.0

    Original matlab implementation: John Duchi (jduchi@cs.berkeley.edu)
    Python-port: Copyright 2012 by Thomas Wiecki (thomas.wiecki@gmail.com).
    """
    v = np.asarray(v)
    p = len(v)

    # Clip negative entries to zero, then sort v into u in descending order
    v = (v > 0) * v
    u = np.sort(v)[::-1]
    sv = np.cumsum(u)

    rho = np.where(u > (sv - b) / np.arange(1, p+1))[0][-1]
    theta = np.max([0, (sv[rho] - b) / (rho+1)])
    w = (v - theta)
    w[w<0] = 0
    return w
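The projection step is easier to follow without the NumPy indexing. The following is a pure-Python restatement of the same steps (clip, sort, find the threshold, shift); the name `simplex_projection_py` is introduced here for illustration only and is not part of the original notebook.

```python
def simplex_projection_py(v, b=1.0):
    """Pure-Python restatement of simplex_projection: clip, sort, threshold."""
    v = [max(x, 0.0) for x in v]            # clip negatives, as the NumPy version does
    u = sorted(v, reverse=True)             # descending order
    cumsum, running = [], 0.0
    for x in u:
        running += x
        cumsum.append(running)
    # Last index j where u[j] > (cumsum[j] - b) / (j + 1)
    rho = max(j for j in range(len(u)) if u[j] > (cumsum[j] - b) / (j + 1))
    # Amount to subtract from every entry so the kept entries sum to b
    theta = max(0.0, (cumsum[rho] - b) / (rho + 1))
    return [max(x - theta, 0.0) for x in v]


proj = simplex_projection_py([0.4, 0.3, -0.4, 0.5])
```

For the docstring's input, the three positive entries sum to 1.2, so each is reduced by 0.2 / 3 and the negative entry stays at zero.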

Simplified version

In [ ]:

import zipline

# Pseudocode: deviation_from_mavg and current_portfolio are not defined here.
class OLMARb(zipline.TradingAlgorithm):
    def initialize(self, eps=1, window_length=5):
        # How aggressively should we rebalance the portfolio
        self.eps = eps
        # How many days to compute the moving average over
        self.window_length = window_length
        # Include mavg transform into simulation. Makes it available
        # in handle_data() below.
        self.add_transform(zipline.transforms.MovingAverage, 'mavg', ['price'],
                           window_length=window_length)

    def handle_data(self, data):
        # Calculate how much each price diverged from its moving average
        for stock in data:
            deviation_from_mavg[stock] = data[stock].price - data[stock].mavg
        # Calculate new portfolio allocation
        desired_portfolio = current_portfolio + self.eps * deviation_from_mavg
        # Rebalance portfolio
        self.rebalance_portfolio(desired_portfolio)
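For a version of the update that actually runs, here is a single OLMAR step in plain Python with no Zipline dependency. It follows the arithmetic of the full `handle_data` above; the helper names `project_to_simplex` and `olmar_step` and the two-stock inputs are hypothetical and chosen only to keep the numbers easy to check.

```python
def project_to_simplex(v, total=1.0):
    """Same clip / sort / threshold steps as simplex_projection, without NumPy."""
    v = [max(x, 0.0) for x in v]
    u = sorted(v, reverse=True)
    cumsum, running = [], 0.0
    for x in u:
        running += x
        cumsum.append(running)
    rho = max(j for j in range(len(u)) if u[j] > (cumsum[j] - total) / (j + 1))
    theta = max(0.0, (cumsum[rho] - total) / (rho + 1))
    return [max(x - theta, 0.0) for x in v]


def olmar_step(b_t, prices, mavgs, eps):
    """One OLMAR portfolio update starting from the current weights b_t."""
    # Predicted price relatives: moving average divided by current price
    x_tilde = [m / p for m, p in zip(mavgs, prices)]
    x_bar = sum(x_tilde) / len(x_tilde)
    mark_rel_dev = [x - x_bar for x in x_tilde]
    # Expected return of the current portfolio if prices revert to the mean
    exp_return = sum(w * x for w, x in zip(b_t, x_tilde))
    variability = sum(d * d for d in mark_rel_dev)
    if variability == 0.0:
        step_size = 0.0                      # no portfolio update
    else:
        step_size = max(0.0, (eps - exp_return) / variability)
    b = [w + step_size * d for w, d in zip(b_t, mark_rel_dev)]
    return project_to_simplex(b)


# Stock 0 trades below its moving average, stock 1 above it
new_b = olmar_step(b_t=[0.5, 0.5], prices=[10.0, 20.0], mavgs=[11.0, 19.0], eps=2)
```

With `eps=2` the step is large enough that the whole portfolio moves into the stock trading below its average. With `eps=1` the expected return (1.025) already exceeds `eps`, so the step size is zero and the weights stay at `[0.5, 0.5]`.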

Import data from Yahoo Finance.

In [3]:

start = datetime.datetime(2001, 8, 1)

end = datetime.datetime(2013, 2, 1)

In [4]:

data = zipline.utils.load_from_yahoo(stocks=STOCKS, indexes={}, start=start, end=end)

AMD CERN COST DELL GPS INTC MMM

In [5]:

data = data.dropna()

In [6]:

data.head()

Out[6]:

| Date | AMD | CERN | COST | DELL | GPS | INTC | MMM |
|---|---|---|---|---|---|---|---|
| 2001-08-01 00:00:00+00:00 | 18.90 | 13.51 | 35.70 | 26.75 | 23.42 | 24.23 | 41.88 |
| 2001-08-02 00:00:00+00:00 | 19.76 | 13.47 | 36.67 | 27.98 | 23.55 | 25.30 | 41.99 |
| 2001-08-03 00:00:00+00:00 | 19.25 | 13.44 | 36.35 | 27.63 | 23.25 | 24.97 | 42.16 |
| 2001-08-06 00:00:00+00:00 | 17.62 | 13.88 | 35.18 | 27.40 | 23.03 | 23.87 | 41.27 |
| 2001-08-07 00:00:00+00:00 | 17.55 | 13.92 | 35.63 | 27.27 | 23.03 | 24.14 | 41.83 |

Let's see how it performs...

In [4]:

def run_olmar(eps=1, window_length=5):
    olmar = OLMAR(eps=eps, window_length=window_length)
    results = olmar.run(data)
    return results.portfolio_value

In [12]:

results_eps2 = run_olmar(eps=2)

[2013-03-07 22:11] INFO: Transform: Running StatefulTransform [mavg]
[2013-03-07 22:12] INFO: Transform: Finished StatefulTransform [mavg]
[2013-03-07 22:12] INFO: Performance: Simulated 2893 trading days out of 2893.
[2013-03-07 22:12] INFO: Performance: first open: 2001-08-01 13:30:00+00:00
[2013-03-07 22:12] INFO: Performance: last close: 2013-02-01 21:00:00+00:00

In [14]:

results_eps2.plot()

Out[14]:

<matplotlib.axes.AxesSubplot at 0xba48b8c>

Can we do better?

In [25]:

results_eps1 = run_olmar(eps=1)

[2013-03-07 22:44] INFO: Transform: Running StatefulTransform [mavg]
[2013-03-07 22:45] INFO: Transform: Finished StatefulTransform [mavg]
[2013-03-07 22:45] INFO: Performance: Simulated 2893 trading days out of 2893.
[2013-03-07 22:45] INFO: Performance: first open: 2001-08-01 13:30:00+00:00
[2013-03-07 22:45] INFO: Performance: last close: 2013-02-01 21:00:00+00:00

In [26]:

results_eps2.plot()

results_eps1.plot(c='g')

Out[26]:

<matplotlib.axes.AxesSubplot at 0xd6b49cc>

Parameter Optimization

Find the values of the parameters eps and window_length that maximize the objective function (i.e. cumulative wealth).
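Written out, and assuming the objective is the final wealth $W_T$ of a backtest over $T$ trading days (the symbols $W_0$ for starting capital, $r_t$ for the daily portfolio return and $w$ for window_length are introduced here for illustration and do not appear in the notebook):

$$(\epsilon^{*}, w^{*}) = \arg\max_{\epsilon,\, w} W_T(\epsilon, w), \qquad W_T(\epsilon, w) = W_0 \prod_{t=1}^{T} \bigl(1 + r_t(\epsilon, w)\bigr)$$

Each evaluation of $W_T$ is one full backtest, which is why the cost per function call dominates the choice of optimizer.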

A solved problem?

Lots of optimization algorithms exist, for example:

- Hill climbing / Gradient ascent

- Convex optimization

Requirements and Properties:

- Continuous, differentiable.

- Convex

- Serial

Our objective function:

- Might be discontinuous; window_length is discrete.

- Might be multi-modal.

- Slow to compute -> need parallelism.

This is a more general problem, referred to as hyper-parameter optimization.
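A toy example shows why local methods struggle with such objectives. In the sketch below, the function `objective` is a made-up discrete, two-peaked function (not derived from any backtest), and `hill_climb` is a deliberately naive local search; both are assumptions for illustration.

```python
def objective(x):
    """Toy multi-modal objective on integers: local peak at 3, global peak at 15."""
    return max(5 - abs(x - 3), 10 - 2 * abs(x - 15))


def hill_climb(f, x, lo=0, hi=20):
    """Step to the better neighbour until no neighbour improves on x."""
    while True:
        neighbours = [n for n in (x - 1, x + 1) if lo <= n <= hi]
        best = max(neighbours, key=f)
        if f(best) <= f(x):
            return x
        x = best


local_opt = hill_climb(objective, 0)             # gets stuck on the first peak
global_opt = max(range(0, 21), key=objective)    # exhaustive search over the grid
```

Starting from 0, the local search stops at the first peak and never reaches the higher one, while evaluating every grid point finds it at the cost of many more function calls.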

Support Vector Machine

- Parameters:

- Normal-vector of hyperplane $\leftarrow$ Convex

- Hyper-parameters:

- Soft margin parameter C.

- Kernel-type (RBF, polynomial) and associated parameter.

Computational Neuroscience

- Parameters:

- Weights $\leftarrow$ Continuous.

- Hyper-parameters:

- Network architecture (number of hidden units and layers)

Algorithmic Trading

- Parameters:

- Portfolio-allocation.

- Hyper-parameters:

- eps and window_length.

The problem thus far

- Large-scale models.

- Expensive to compute.

- No knowledge of how the objective function changes w.r.t. hyper-parameters.

Required

- Parallelism

- Global methods (not gradient-descent)

- Grid-search

- Bayesian Optimization
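Grid search, the simplest of the global methods listed above, can be sketched in a few lines. The function `toy_wealth` below is a hypothetical stand-in for a backtest (its peak at eps=10, window_length=5 is arbitrary), and the grid values are likewise made up.

```python
import itertools


def toy_wealth(eps, window_length):
    """Hypothetical stand-in for a backtest; peaks at eps=10, window_length=5."""
    return -(eps - 10) ** 2 - (window_length - 5) ** 2


eps_grid = [1, 5, 10, 20, 40]
window_grid = [3, 5, 10]

# Every combination of the two hyper-parameters
grid = list(itertools.product(eps_grid, window_grid))

# Each grid point is independent of the others, so the evaluations
# could be farmed out to separate workers
best_params = max(grid, key=lambda p: toy_wealth(*p))
```

The number of evaluations grows as the product of the grid sizes (15 here), which is what makes parallel execution attractive once each evaluation is a full backtest.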

Parallel Computing in Python

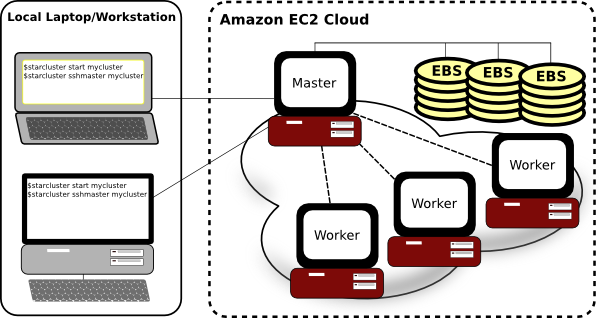

Launch IPython cluster.

- command line: ipcluster

- IPython notebook

Connect to master

In [9]:

from IPython.parallel import Client

client = Client()

queue = client.direct_view()

print "Available workers: ", len(queue)

Available workers: 2

In [10]:

squared = queue.map_sync(lambda x: x**2, [1, 2, 3, 4])

print squared

[1, 4, 9, 16]
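For readers without an IPython cluster at hand, the same map pattern can be tried with the Python standard library. This is a stand-in using `concurrent.futures`, not the IPython.parallel API, and the function name `square` is only for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def square(x):
    return x ** 2


# Two workers, mirroring the two local engines above
with ThreadPoolExecutor(max_workers=2) as pool:
    # map() returns results in input order, like map_sync
    squared = list(pool.map(square, [1, 2, 3, 4]))
```

Threads share one interpreter, so this only illustrates the interface; CPU-bound backtests need separate processes or engines to run truly in parallel.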

%%px magic allows execution of cell on all nodes

In [3]:

%%px

import numpy as np

print np.linspace(0, 1, 5)

[stdout:0] [ 0. 0.25 0.5 0.75 1. ]
[stdout:1] [ 0. 0.25 0.5 0.75 1. ]

Great -- can run models in parallel on my laptop!

In [5]:

!cat ~/.starcluster/config_pydata

[global]
INCLUDE=~/.starcluster/aws, ~/.starcluster/keys
DEFAULT_TEMPLATE=pydata

[cluster pydata]
KEYNAME = mykey
CLUSTER_SIZE = 20
NODE_INSTANCE_TYPE = c1.xlarge
NODE_IMAGE_ID = ami-8e38a4e7
# zipline specific
PLUGINS = ipcluster

[plugin ipcluster]
SETUP_CLASS = starcluster.plugins.ipcluster.IPCluster

In [2]:

import time

In [4]:

tic = time.time()

!starcluster -c ~/.starcluster/config_pydata start pydata

toc = time.time()

StarCluster - (http://star.mit.edu/cluster) (v. 0.9999)

Software Tools for Academics and Researchers (STAR)

Please submit bug reports to starcluster@mit.edu

>>> Using default cluster template: pydata

>>> Validating cluster template settings...

>>> Cluster template settings are valid

>>> Starting cluster...

>>> Launching a 20-node cluster...

>>> Creating security group @sc-pydata...

Reservation:r-e5889e9f

>>> Waiting for cluster to come up... (updating every 30s)

>>> Waiting for all nodes to be in a 'running' state...

20/20 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> Waiting for SSH to come up on all nodes...

20/20 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> Waiting for cluster to come up took 1.542 mins

>>> The master node is ec2-75-101-213-6.compute-1.amazonaws.com

>>> Configuring cluster...

>>> Running plugin starcluster.clustersetup.DefaultClusterSetup

>>> Configuring hostnames...

20/20 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> Creating cluster user: sgeadmin (uid: 1001, gid: 1001)

20/20 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> Configuring scratch space for user(s): sgeadmin

20/20 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> Configuring /etc/hosts on each node

20/20 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> Starting NFS server on master

>>> Configuring NFS exports path(s):

/home

>>> Mounting all NFS export path(s) on 19 worker node(s)

19/19 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> Setting up NFS took 0.078 mins

>>> Configuring passwordless ssh for root

>>> Configuring passwordless ssh for sgeadmin

>>> Running plugin starcluster.plugins.sge.SGEPlugin

>>> Configuring SGE...

>>> Configuring NFS exports path(s):

/opt/sge6

>>> Mounting all NFS export path(s) on 19 worker node(s)

19/19 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> Setting up NFS took 0.090 mins

>>> Installing Sun Grid Engine...

19/19 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> Creating SGE parallel environment 'orte'

20/20 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> Adding parallel environment 'orte' to queue 'all.q'

>>> Running plugin ipcluster

>>> Writing IPython cluster config files

>>> Starting the IPython controller and 7 engines on master

>>> Waiting for JSON connector file... | /

/home/whyking/.starcluster/ipcluster/SecurityGroup:@sc-pydata-us-east-1.json 100% || Time: 00:00:00 87.61 M/s

>>> Authorizing tcp ports [1000-65535] on 0.0.0.0/0 for: IPython controller

>>> Adding 152 engines on 19 nodes

19/19 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> IPCluster has been started on SecurityGroup:@sc-pydata for user 'sgeadmin'

with 159 engines on 20 nodes.

To connect to cluster from your local machine use:

from IPython.parallel import Client

client = Client('/home/whyking/.starcluster/ipcluster/SecurityGroup:@sc-pydata-us-east-1.json', sshkey='/home/whyking/.ssh/mykey.rsa')

See the IPCluster plugin doc for usage details:

http://star.mit.edu/cluster/docs/latest/plugins/ipython.html

>>> IPCluster took 0.632 mins

>>> Configuring cluster took 2.485 mins

>>> Starting cluster took 4.077 mins

The cluster is now ready to use. To login to the master node

as root, run:

$ starcluster sshmaster pydata

If you're having issues with the cluster you can reboot the

instances and completely reconfigure the cluster from

scratch using:

$ starcluster restart pydata

When you're finished using the cluster and wish to terminate

it and stop paying for service:

$ starcluster terminate pydata

Alternatively, if the cluster uses EBS instances, you can

use the 'stop' command to shutdown all nodes and put them

into a 'stopped' state preserving the EBS volumes backing

the nodes:

$ starcluster stop pydata

WARNING: Any data stored in ephemeral storage (usually /mnt)

will be lost!

You can activate a 'stopped' cluster by passing the -x

option to the 'start' command:

$ starcluster start -x pydata

This will start all 'stopped' nodes and reconfigure the

cluster.

In [6]:

print "Took secs:", toc-tic

Took secs: 246.641336918

In [ ]:

from IPython.parallel import Client

client_sc = Client('/home/whyking/.starcluster/ipcluster/SecurityGroup:@sc-pydata-us-east-1.json', sshkey='/home/whyking/.ssh/mykey.rsa')

In [16]:

queue_sc = client_sc.direct_view()

print "Available workers: ", len(queue_sc)

Available workers: 157

In [2]:

queue_sc.map_sync(lambda x: x**2, [1, 2, 3, 4, 5, 6])

Out[2]:

[1, 4, 9, 16, 25, 36]

Putting it all together.

In [17]:

%%px

import sys

import logbook

zipline_logging = logbook.NestedSetup([

logbook.NullHandler(level=logbook.DEBUG, bubble=True),

logbook.StreamHandler(sys.stdout, level=logbook.INFO),

logbook.StreamHandler(sys.stderr, level=logbook.ERROR),

])

zipline_logging.push_application()

import numpy as np

import datetime

import zipline

from zipline.algorithm import TradingAlgorithm

STOCKS = ['AMD', 'CERN', 'COST', 'DELL', 'GPS', 'INTC', 'MMM']

class OLMAR(TradingAlgorithm):
    def initialize(self, eps=1, window_length=5):
        self.stocks = STOCKS
        self.m = len(self.stocks)
        self.price = {}
        self.b_t = np.ones(self.m) / self.m
        self.last_desired_port = np.ones(self.m) / self.m
        self.eps = eps
        self.init = True
        self.days = 0
        self.window_length = window_length
        self.add_transform(zipline.transforms.MovingAverage, 'mavg', ['price'],
                           window_length=window_length)
        self.set_slippage(zipline.finance.slippage.FixedSlippage())
        self.set_commission(zipline.finance.commission.PerShare(cost=0))

    def handle_data(self, data):
        self.days += 1
        if self.days < self.window_length:
            return

        if self.init:
            self.rebalance_portfolio(data, self.b_t)
            self.init = False
            return

        m = self.m
        x_tilde = np.zeros(m)
        b = np.zeros(m)

        # find relative moving average price for each security
        for i, stock in enumerate(self.stocks):
            price = data[stock].price
            # Relative mean deviation
            x_tilde[i] = data[stock]['mavg']['price'] / price

        ###########################
        # Inside of OLMAR (algo 2)
        x_bar = x_tilde.mean()

        # market relative deviation
        mark_rel_dev = x_tilde - x_bar

        # Expected return with current portfolio
        exp_return = np.dot(self.b_t, x_tilde)
        weight = self.eps - exp_return
        variability = (np.linalg.norm(mark_rel_dev))**2

        if variability == 0.0:
            step_size = 0  # no portfolio update
        else:
            step_size = max(0, weight/variability)

        b = self.b_t + step_size*mark_rel_dev
        b_norm = simplex_projection(b)
        #print b_norm

        self.rebalance_portfolio(data, b_norm)

        # Predicted return with new portfolio
        pred_return = np.dot(b_norm, x_tilde)

        # update portfolio
        self.b_t = b_norm

    def rebalance_portfolio(self, data, desired_port):
        # rebalance portfolio
        desired_amount = np.zeros_like(desired_port)
        current_amount = np.zeros_like(desired_port)
        prices = np.zeros_like(desired_port)

        if self.init:
            positions_value = self.portfolio.starting_cash
        else:
            positions_value = self.portfolio.positions_value + self.portfolio.cash

        for i, stock in enumerate(self.stocks):
            current_amount[i] = self.portfolio.positions[stock].amount
            prices[i] = data[stock].price

        desired_amount = np.round(desired_port * positions_value / prices)
        diff_amount = desired_amount - current_amount

        for i, stock in enumerate(self.stocks):
            self.order(stock, diff_amount[i])  # order_stock


def simplex_projection(v, b=1):
    """Projection of vectors onto the simplex domain

    Implemented according to the paper: Efficient projections onto the
    l1-ball for learning in high dimensions, John Duchi, et al. ICML 2008.
    Implementation Time: 2011 June 17 by Bin@libin AT pmail.ntu.edu.sg
    Optimization Problem: min_{w}\| w - v \|_{2}^{2}
    s.t. sum_{i=1}^{m} w_{i} = z, w_{i}\geq 0

    Input: A vector v \in R^{m}, and a scalar z > 0 (passed as b, default=1)
    Output: Projection vector w

    :Example:
    >>> proj = simplex_projection([.4 ,.3, -.4, .5])
    >>> print proj
    array([ 0.33333333, 0.23333333, 0. , 0.43333333])
    >>> print proj.sum()
    1.0

    Original matlab implementation: John Duchi (jduchi@cs.berkeley.edu)
    Python-port: Copyright 2012 by Thomas Wiecki (thomas.wiecki@gmail.com).
    """
    v = np.asarray(v)
    p = len(v)

    # Clip negative entries to zero, then sort v into u in descending order
    v = (v > 0) * v
    u = np.sort(v)[::-1]
    sv = np.cumsum(u)

    rho = np.where(u > (sv - b) / np.arange(1, p+1))[0][-1]
    theta = np.max([0, (sv[rho] - b) / (rho+1)])
    w = (v - theta)
    w[w<0] = 0
    return w

start = datetime.datetime(2001, 8, 1)

end = datetime.datetime(2013, 2, 1)

data = zipline.utils.factory.load_from_yahoo(stocks=STOCKS, indexes={}, start=start, end=end)

data = data.dropna()

[stdout:0] AMD CERN COST DELL GPS INTC MMM
[stdout:1] AMD CERN COST DELL GPS INTC MMM
...
[stdout:158] AMD CERN COST DELL GPS INTC MMM

In [18]:

def run_olmar(params):
    olmar = OLMAR(eps=params[0], window_length=params[1])
    results = olmar.run(data)
    return results.portfolio_value.mean()

In [12]:

eps = np.linspace(1.1, 40, len(queue_sc))

print eps

[ 1.1 1.89387755 2.6877551 3.48163265 4.2755102 5.06938776 5.86326531 6.65714286 7.45102041 8.24489796 9.03877551 9.83265306 10.62653061 11.42040816 12.21428571 13.00816327 13.80204082 14.59591837 15.38979592 16.18367347 16.97755102 17.77142857 18.56530612 19.35918367 20.15306122 20.94693878 21.74081633 22.53469388 23.32857143 24.12244898 24.91632653 25.71020408 26.50408163 27.29795918 28.09183673 28.88571429 29.67959184 30.47346939 31.26734694 32.06122449 32.85510204 33.64897959 34.44285714 35.23673469 36.03061224 36.8244898 37.61836735 38.4122449 39.20612245 40. ]

In [8]:

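# run_olmar expects (eps, window_length) pairs; 5 is OLMAR's default window_length
wealth = queue_sc.map_sync(run_olmar, [(e, 5) for e in eps])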

In [9]:

plt.plot(eps, wealth)

Out[9]:

[<matplotlib.lines.Line2D at 0xcf829ec>]

Optimization techniques

Bayesian Optimization via Gaussian Processes.

See Snoek, J., Larochelle, H., & Adams, R. P. (2012). Practical Bayesian Optimization of Machine Learning Algorithms. arXiv:1206.2944. Retrieved from http://arxiv.org/abs/1206.2944

In [ ]:

# define a search space

from hyperopt import hp

space = hp.choice('a',

[

('case 1', 1 + hp.lognormal('c1', 0, 1)),

('case 2', hp.uniform('c2', -10, 10))

])
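To see what kind of values a search space like the one above describes, the sketch below draws samples from a space of the same shape using only the standard library. It does not call hyperopt; the function name `sample_space` is hypothetical, and the equal probability for the two branches is an assumption.

```python
import random


def sample_space(rng):
    """Draw one point from a space shaped like the hyperopt example above."""
    if rng.random() < 0.5:
        # First branch: 1 plus a log-normal draw (mu=0, sigma=1), always above 1
        return ('case 1', 1 + rng.lognormvariate(0, 1))
    # Second branch: uniform on [-10, 10]
    return ('case 2', rng.uniform(-10, 10))


rng = random.Random(0)                      # fixed seed for reproducibility
samples = [sample_space(rng) for _ in range(1000)]
```

Evaluating the objective on independent draws like these amounts to random search, a simple baseline that is as easy to parallelize as a grid.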

Conclusions

- Optimizing large-scale models is a different game.

- Technical solutions:

- IPython Parallel

- StarCluster

- Methodological solutions:

- Can borrow from Machine Learning.

Thanks!

Thomas Wiecki

email: twiecki@quantopian.com

Twitter: @twiecki, @quantopian

GitHub: twiecki